The Complete Beginner’s Guide to Context Engineering

Optimizing AI Responses Through Precise Context Design

When people think about improving AI systems, they often focus on making the model itself smarter or crafting clever prompts. In reality, the biggest difference between a basic AI demo and a truly impressive AI product often comes down to one thing: context.

If you want an AI to perform well, it needs the right information, the right tools, and the right format before it even begins answering. This process is called context engineering, and it is becoming one of the most important skills for AI engineers today.

What is Context Engineering?

Definition:

Context engineering is the discipline of designing and building dynamic systems that provide the right information and tools, in the right format, at the right time, so that a Large Language Model (LLM) can plausibly accomplish the task.

In simpler terms, context engineering is about setting up an AI for success by making sure it has everything it needs before it starts working.

Often, when an AI system fails, the cause is not that the model is incapable but that it did not receive the right context. If the input is incomplete or poorly structured, the output will usually be poor too.

Context Engineering vs Prompt Engineering

Prompt Engineering focuses on crafting a single instruction or question for the AI. This was common in early AI applications. For example:

"You are a helpful assistant. Please summarize this text."

Context Engineering is broader. It focuses on the entire system that gathers, formats, and delivers all relevant information to the model dynamically. This includes:

Instructions

History of interactions

Retrieved knowledge from external sources

Available tools the AI can use

Prompt engineering is a part of context engineering, but context engineering covers the complete pipeline that builds the prompt each time.

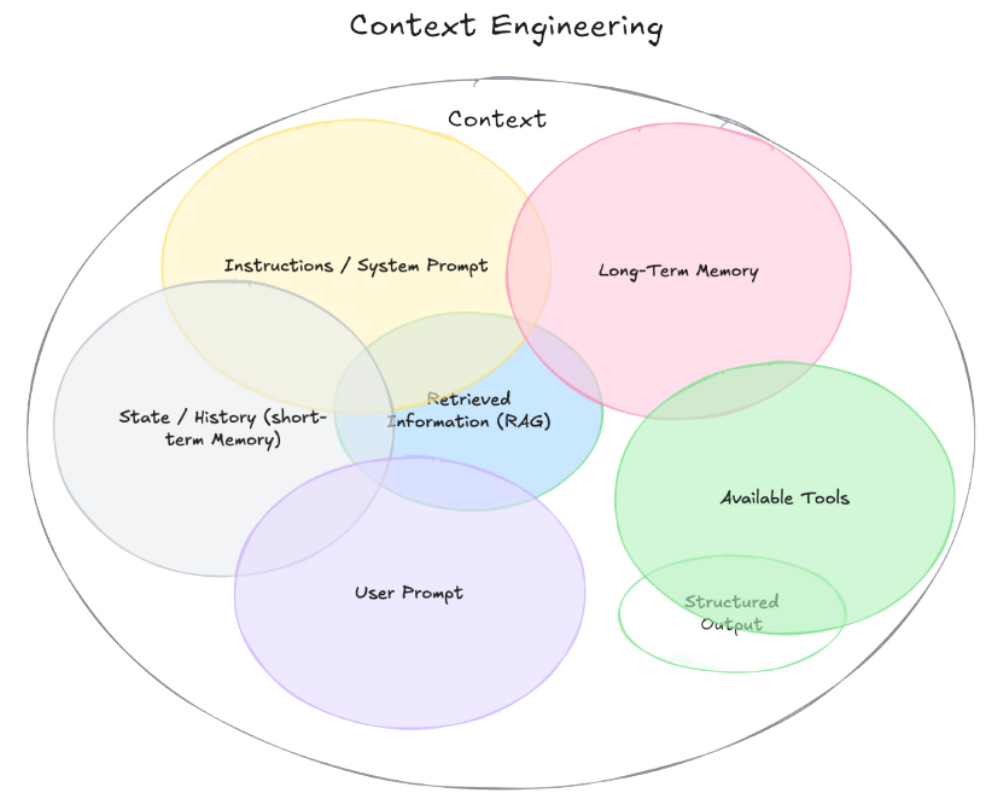

What Counts as “Context”?

In AI, context is not just the user’s question. It includes everything the model sees before generating a response.

Key components of context include:

Instructions / System Prompt – Rules and guidelines for how the AI should behave, possibly with examples.

User Prompt – The specific question or request from the user.

Short-Term Memory (Conversation State) – The recent conversation or interaction history.

Long-Term Memory – Persistent knowledge from past sessions, such as user preferences or project details.

Retrieved Information (RAG) – Facts pulled in from documents, databases, or APIs just before responding.

Available Tools – Functions or APIs the AI can use, such as sending an email or searching the web.

Structured Output Requirements – Specifications for how the response should be formatted, such as JSON or Markdown.
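To make the list above concrete, here is a minimal sketch of how these components might be bundled and flattened into a single model input. The `Context` class, its field names, and the role/content message layout are illustrative assumptions, not the API of any particular framework.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    """Everything the model sees for one call (all names here are illustrative)."""
    system_prompt: str
    user_prompt: str
    history: list[dict] = field(default_factory=list)    # short-term memory
    memories: list[str] = field(default_factory=list)    # long-term memory
    retrieved: list[str] = field(default_factory=list)   # RAG results
    tools: list[dict] = field(default_factory=list)      # tool descriptions
    output_format: str = ""                               # e.g. "JSON"

    def to_messages(self) -> list[dict]:
        """Flatten the components into a list of role/content messages."""
        parts = [self.system_prompt]
        if self.memories:
            parts.append("Known about the user:\n" + "\n".join(f"- {m}" for m in self.memories))
        if self.retrieved:
            parts.append("Reference material:\n" + "\n".join(f"- {r}" for r in self.retrieved))
        if self.tools:
            parts.append(
                "Available tools:\n"
                + "\n".join(f"- {t['name']}: {t['description']}" for t in self.tools)
            )
        if self.output_format:
            parts.append(f"Respond in {self.output_format}.")
        # System content first, then prior turns, then the new user request.
        messages = [{"role": "system", "content": "\n\n".join(parts)}]
        messages.extend(self.history)
        messages.append({"role": "user", "content": self.user_prompt})
        return messages
```

The point of the sketch is that the prompt is an output of code: each call rebuilds it from whatever memories, documents, and tools are relevant at that moment.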

Real-Life Example: Cheap Demo vs Magical Agent

Imagine you are building an AI that schedules meetings.

Cheap Demo Agent

Sees the message: "Hey, are you free for a sync tomorrow?"

Has no calendar access, no knowledge of your relationship with the sender, and no tone preferences.

Responds:

"Tomorrow works for me. What time do you prefer?"

Magical Agent

Before replying, it gathers:

Your calendar data (shows you are fully booked tomorrow)

Email history with the sender (you use a casual tone)

Contact list (identifies them as a key partner)

The tool to send an invite

It replies:

"Hey Jim! Tomorrow is packed for me, but Thursday morning is open. I have sent you an invite. Let me know if that works."

Both use the same AI model. The difference is that one had rich, relevant context.
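The gathering step the magical agent performs could look roughly like the sketch below. Everything in it is hypothetical: the calendar is modeled as a plain set of fully booked dates, the contact list as a dictionary, and `send_invite` is just a tool name, not a real API.

```python
from datetime import date, timedelta
from typing import Optional


def first_free_day(busy_days, start, horizon=7) -> Optional[date]:
    """Return the first day on or after `start` that is not fully booked."""
    for offset in range(horizon):
        day = start + timedelta(days=offset)
        if day not in busy_days:
            return day
    return None  # nothing free within the horizon


def gather_scheduling_context(message, sender, busy_days, contacts, today):
    """Collect the facts the model needs before it drafts a reply."""
    tomorrow = today + timedelta(days=1)
    return {
        "message": message,
        "sender_role": contacts.get(sender, "unknown"),
        "tomorrow_free": tomorrow not in busy_days,
        "next_free_day": first_free_day(busy_days, tomorrow),
        "tools": ["send_invite"],  # hypothetical tool name
    }
```

The cheap demo agent skips this function entirely and sends only `message` to the model, which is why it confidently accepts a meeting on a fully booked day.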

Why Context Engineering Matters

AI can fail for two main reasons:

The model itself is not capable enough.

The model was not given the right context.

As models become more capable, the second reason becomes the more common one.

Typical context problems include:

Missing important information

Poorly formatted input data

Overloading the model with irrelevant details

Common Context Challenges

When working with long-running AI agents, you may face these problems:

Context Poisoning – Incorrect information enters the context and misleads the AI.

Context Distraction – Too much irrelevant data reduces focus.

Context Confusion – Poor formatting or unclear structure causes misunderstanding.

Context Clash – Conflicting information leads to inconsistent results.

Core Strategies for Context Engineering

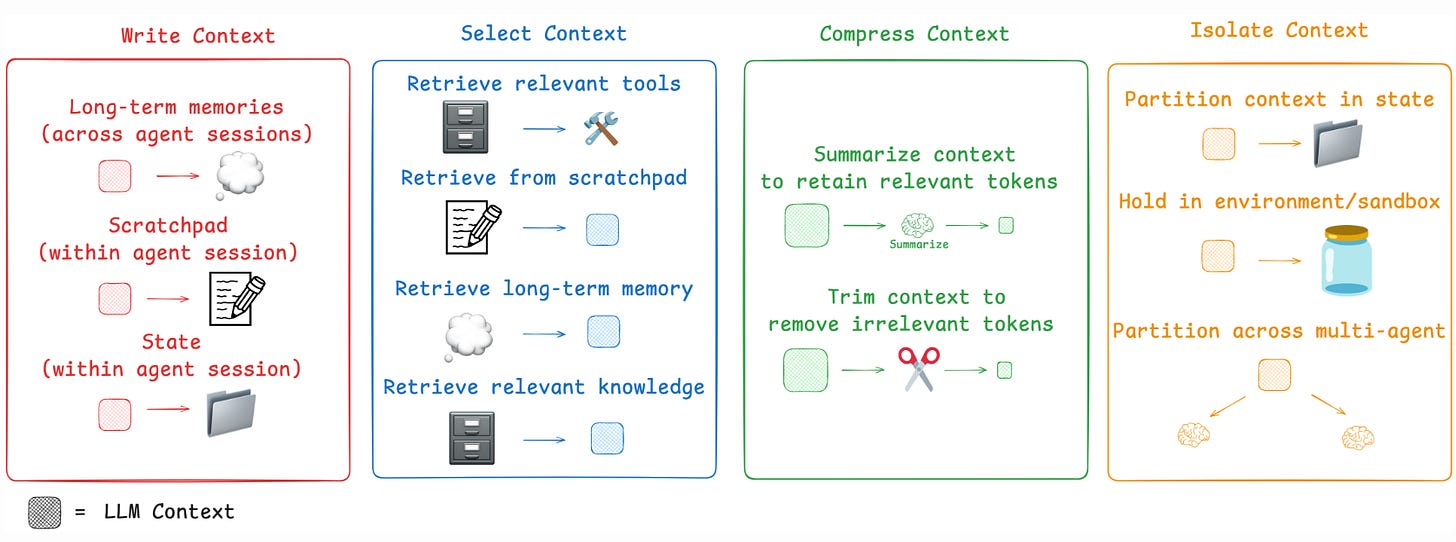

Successful context engineering usually involves four main strategies:

1. Write Context

Store important information outside the model’s current memory so it can be reused later.

Scratchpads – Temporary notes during a task.

Long-Term Memories – Information stored for use across multiple sessions.
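A rough sketch of both ideas, assuming a simple in-memory list for the scratchpad and a local JSON file for long-term memory. The class names and the file-based storage are assumptions made for illustration; a production system would more likely use a database or a vector store.

```python
import json
from pathlib import Path


class Scratchpad:
    """Temporary notes that live only for the duration of one task."""

    def __init__(self):
        self.notes = []

    def write(self, note):
        self.notes.append(note)

    def read(self):
        return "\n".join(self.notes)


class LongTermMemory:
    """Key-value facts persisted to a JSON file so later sessions can reload them."""

    def __init__(self, path):
        self.path = Path(path)
        # Load existing facts if an earlier session already wrote the file.
        self.facts = json.loads(self.path.read_text()) if self.path.exists() else {}

    def remember(self, key, value):
        self.facts[key] = value
        self.path.write_text(json.dumps(self.facts, indent=2))

    def recall(self, key, default=None):
        return self.facts.get(key, default)
```

The scratchpad disappears when the task ends, while anything passed to `remember` is available the next time a `LongTermMemory` is created with the same path.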

2. Select Context

Choose only the relevant information for the current step.

Retrieve the right memories using embeddings or keyword search.

Fetch only the most relevant tools for the task.

Use retrieval-augmented generation (RAG) to bring in external data.
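Selection can be sketched as "score every candidate against the query, keep the top few." The example below uses bag-of-words cosine similarity as a simple stand-in for real embeddings, so it only matches exact words; the function names are illustrative.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_top_k(query, candidates, k=2):
    """Return up to k candidates most similar to the query, dropping zero-score ones."""
    q = bag_of_words(query)
    scored = [(cosine(q, bag_of_words(c)), c) for c in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for score, c in scored[:k] if score > 0]
```

The same function works for memories, documents, or tool descriptions: whatever is in `candidates`, only the closest matches reach the model. Swapping `bag_of_words` and `cosine` for an embedding model would let it match on meaning instead of exact words.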

3. Compress Context

Reduce token usage without losing important details.

Summarization – Turn long histories into concise summaries.

Trimming – Remove old or irrelevant data.

Hierarchical Summarization – Summarize in stages: condense individual chunks first, then summarize those summaries.
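Trimming and summarization can be combined, as in the sketch below. It makes two loud assumptions: tokens are approximated by whitespace-separated words rather than a real tokenizer, and `summarize` is any function you supply (in practice, probably a call to an LLM).

```python
def count_tokens(text):
    """Rough stand-in for a tokenizer: counts whitespace-separated words."""
    return len(text.split())


def trim_history(messages, budget):
    """Keep the most recent messages whose combined size fits within `budget`."""
    kept = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(msg["content"])
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


def compress_history(messages, budget, summarize):
    """Trim to budget, then replace the dropped prefix with one summary message."""
    recent = trim_history(messages, budget)
    dropped = messages[: len(messages) - len(recent)]
    if not dropped:
        return recent
    summary = summarize(" ".join(m["content"] for m in dropped))
    header = {"role": "system", "content": f"Summary of earlier conversation: {summary}"}
    return [header] + recent
```

Note that the summary message itself is not counted against `budget` here; a stricter version would reserve room for it.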

4. Isolate Context

Break tasks into smaller, more focused environments.

Multi-Agent Systems – Each agent handles a specific sub-task with its own tools and memory.

Environment Isolation – Run heavy processes outside the LLM and feed back only results.

State Management – Keep structured data separate and reveal only what is needed.
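Two of these ideas fit in a few lines. The sketch below assumes a hypothetical `AgentState` object that holds everything the system knows, and a `worker` callable standing in for a sub-agent; neither comes from a specific framework.

```python
from dataclasses import dataclass, field


@dataclass
class AgentState:
    """Structured state kept outside the prompt (field names are illustrative)."""
    task: str
    findings: list = field(default_factory=list)
    raw_tool_output: dict = field(default_factory=dict)  # bulky, rarely shown to the model

    def view(self, *names):
        """Return only the named fields, ready to be placed in the context."""
        return {name: getattr(self, name) for name in names}


def run_isolated(subtask, worker):
    """Run `worker` on a fresh context holding only the subtask; return only its result."""
    local_context = [{"role": "user", "content": subtask}]
    return worker(local_context)
```

Calling `state.view("task", "findings")` exposes the useful fields while the bulky tool output stays out of the prompt, and `run_isolated` ensures a sub-agent never sees the parent conversation.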

Everyday Analogy

Think of context engineering like preparing a chef to cook.

Without context engineering:

You give the chef one ingredient and say, "Make something."

With context engineering:

You give the chef the full recipe, the right tools, prepped ingredients, and knowledge of your preferences.

The second scenario almost always produces better results.

The Future of Context Engineering

Leaders like Shopify CEO Tobi Lütke and AI researcher Andrej Karpathy have publicly argued that context engineering, more than prompt engineering, describes the core skill in AI development.

If that view holds, we can expect the following:

AI engineers will be evaluated on how well they manage context.

AI frameworks will compete based on context handling capabilities.

Businesses will see more "context failures" than "model failures."

Key Takeaways

Context engineering is essential for building reliable AI agents.

It is about systems, not just single prompts.

The right information, tools, and format can turn an average AI into an exceptional one.

Poor context leads to poor performance, regardless of model quality.

If prompt engineering is about writing a clever question, context engineering is about designing the entire setup, giving the AI all the resources it needs, and ensuring it is ready to perform at its best.

Until next time, stay curious. —M